AI product development in 2025 is no longer confined to the labs of large tech companies. With over 70% of organizations now investing in AI initiatives (Gartner, 2025) and more than 60% of new software products integrating AI capabilities from day one (IDC), the field is rapidly democratizing. The widespread availability of pre-trained foundation models, low-code AI platforms, and cloud-native AI tools has drastically lowered the barrier to entry. Whether you’re a startup, an enterprise, or a solo developer, building robust, scalable, and responsible AI products is now within reach. This article offers a deep technical dive into the end-to-end journey of AI product development, focusing on the tools, frameworks, processes, and best practices needed to build intelligent products in today’s ecosystem.

What Is AI Product Development, and What Does It Look Like in 2025?

AI product development refers to the structured process of designing, building, testing, deploying, and maintaining software products that leverage artificial intelligence to deliver core functionality. Unlike traditional software development, AI product development is data-driven and often iterative, involving machine learning (ML), deep learning, reinforcement learning, and natural language processing (NLP) as essential components.

Whether you’re developing an AI-powered recommendation engine, a generative AI assistant, a computer vision system, or a predictive analytics dashboard, the foundation of successful AI product development lies in marrying data science with engineering and product thinking.

Let’s look at a few credible statistics that put this in context.

- 76% of organizations are actively looking to use AI for new product and service innovation, according to a recent Gartner report based on over 400 enterprise CIOs (source: Lifewire)

- 70% of CDAOs (Chief Data & Analytics Officers) now lead AI initiatives in enterprises, a clear sign of shifting AI ownership toward data leadership. (source: aimagazine)

- Worldwide AI platforms software revenue is projected to grow more than fivefold, from $27.9 billion in 2023 to $153 billion by 2028, at a compound annual growth rate (CAGR) of 40.6%. (source: authority)
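As a quick sanity check on the revenue projection above, the growth rate can be recomputed from the two endpoints. This is a minimal sketch: the helper name `cagr` is ours, and it assumes five annual compounding periods between 2023 and 2028.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.40 means 40%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above: $27.9B (2023) to $153B (2028), i.e. 5 annual periods.
growth_multiple = 153 / 27.9
rate = cagr(27.9, 153, years=5)
print(f"multiple: {growth_multiple:.2f}x, CAGR: {rate:.1%}")
```

The endpoints imply a multiple of roughly 5.5x and a rate between 40% and 41%, in line with the quoted CAGR once rounding of the dollar figures is taken into account.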

Some more market data:

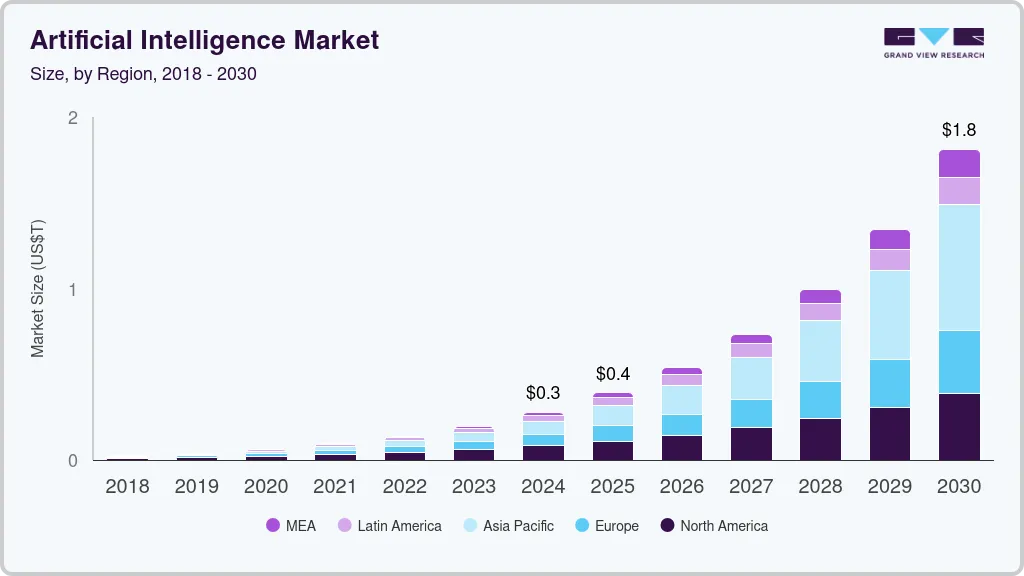

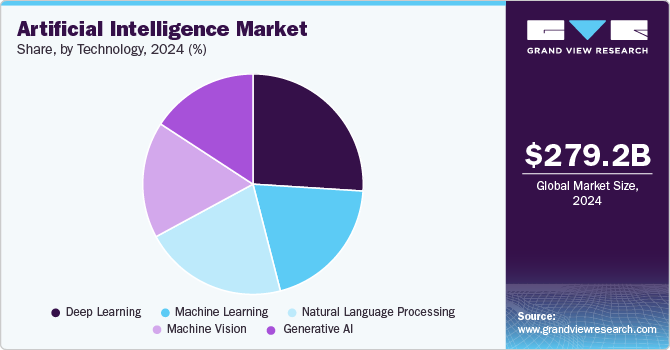

As we researched more, we found that in 2024, the global artificial intelligence (AI) market was estimated to be worth approximately USD 279.22 billion. With increasing innovation and investment from leading technology companies, the market is expected to expand significantly, reaching around USD 1,811.75 billion by 2030.

This growth represents an impressive compound annual growth rate (CAGR) of 35.9% between 2025 and 2030, according to a report published by Grand View Research. The surge in AI adoption is being fueled by advancements across multiple sectors, including automotive, healthcare, finance, retail, and manufacturing.

In 2024, deep learning emerged as the dominant technology segment within the AI market, primarily due to its effectiveness in handling complex, data-intensive applications such as speech and content recognition. Its ability to process large-scale datasets efficiently continues to attract significant investment, with increased R&D spending by major tech companies accelerating adoption across industries.

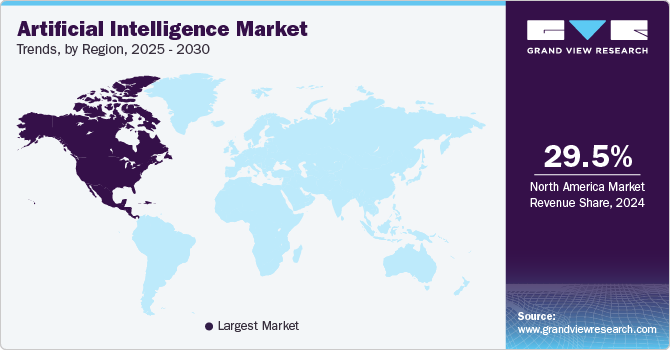

Regionally, North America emerged as the leading contributor, capturing roughly 29.5% of the total market share in 2024, with the U.S. market poised for substantial growth throughout the forecast period. On the solution front, software-based AI offerings dominated the industry, contributing about 35% to the global revenue. Operational functions accounted for the highest share among functional applications, while advertising and media were the leading sectors in terms of end-use adoption.

Notably, while North America holds the largest share, the Asia Pacific region is projected to experience the fastest growth over the coming years, driven by increasing digital transformation initiatives and AI-focused investments.


Now, let’s look at some challenges faced in AI product development.

Top 5 Challenges in AI Product Development

Despite better tooling and steady advances in models, AI product development still faces hurdles:

1. Data Quality and Availability

High-quality, annotated data is essential for training effective AI models, but access to such datasets remains a major barrier, especially in niche or regulated domains. Inconsistent, biased, or incomplete data can severely degrade model performance and trustworthiness.

2. Model Generalization in Real-World Settings

AI models often perform well in controlled environments but struggle when exposed to real-world variability. Factors like domain shift, edge cases, or unexpected user behavior can lead to degraded outcomes and model drift over time.

3. Latency and Cost of Inference at Scale

Deploying AI models in production, especially those based on large architectures, can lead to high compute costs and latency issues. Optimizing models for inference on edge devices or in real-time systems requires specialized engineering and hardware acceleration.

4. Cross-Functional Collaboration (PMs, Data Scientists, Engineers)

Successful AI product development demands tight coordination between product managers, data scientists, and software engineers. Misalignment in goals, timelines, or understanding of model capabilities can result in delays, rework, or failed deployments.

5. Regulatory and Ethical Compliance

As AI systems impact sensitive domains like healthcare and finance, adherence to legal and ethical standards becomes critical. Ensuring transparency, fairness, and accountability in AI decision-making is a growing concern, especially under regulations like the EU AI Act or HIPAA.

From Roadblocks to Results: AI product development isn’t just about building intelligent systems; it’s about navigating complexity with clarity. While the road is filled with challenges like poor data quality, latency issues, and compliance hurdles, each phase of development offers a built-in opportunity to solve them. Let’s walk through how these challenges can be tackled at every critical stage of the AI product development lifecycle.

Mastering AI Product Development Phase-by-Phase

Step 1. Problem Definition: Set the Stage with Responsible AI Thinking

Begin by translating business objectives into well-defined, solvable AI problems. Early engagement with cross-functional teams (PMs, engineers, compliance experts) ensures realistic scope and risk alignment. Embedding ethical principles from the start, like fairness and explainability, sets a solid foundation for regulatory compliance and public trust.

Step 2. Data Strategy: Build Better Data, Not Just More

Tackle the data quality and availability challenge by creating a data-centric pipeline. Use weak supervision tools (e.g., Snorkel) and synthetic data generators (e.g., GANs) to enrich your training data. Implement robust data versioning and validation checks to maintain dataset integrity over time. Diverse data sourcing helps models generalize better, reducing real-world performance gaps later on.
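The validation and versioning checks mentioned above can be sketched with the standard library alone. This is an illustrative sketch, not a replacement for a dedicated data versioning tool: the schema in `REQUIRED_FIELDS`, the label set, and both function names are hypothetical.

```python
import hashlib
import json

REQUIRED_FIELDS = {"text": str, "label": str}  # hypothetical schema
ALLOWED_LABELS = {"positive", "negative"}      # hypothetical label set


def validate_record(record: dict) -> list[str]:
    """Return a list of problems found in one record (empty means valid)."""
    problems = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            problems.append(f"wrong type for {field}")
    if isinstance(record.get("text"), str) and not record["text"].strip():
        problems.append("empty text")
    label = record.get("label")
    if isinstance(label, str) and label not in ALLOWED_LABELS:
        problems.append(f"unknown label: {label}")
    return problems


def dataset_fingerprint(records: list[dict]) -> str:
    """Content hash of the dataset; changing any record changes the hash."""
    canonical = json.dumps(records, sort_keys=True, ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()
```

Storing the fingerprint next to each trained model makes it possible to tell later which exact snapshot of the data produced it. Note that the hash depends on record order, so records should be sorted consistently before fingerprinting.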

Step 3. Model Training: Optimize for Accuracy, Generalization, and Efficiency

Use diverse training datasets and apply domain adaptation techniques to improve generalization. Implement model optimization strategies like quantization and distillation to reduce latency and inference costs. Adopt experiment tracking platforms like MLflow or W&B to streamline reproducibility, while testing for bias and robustness using fairness audit tools.
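To make the idea of quantization concrete, here is a minimal sketch of symmetric int8 quantization with a single shared scale. It is a simplified illustration in plain Python, not how any particular framework implements it; production toolchains typically add per-channel scales and calibration data.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the quantized integers."""
    return [q * scale for q in quantized]


weights = [0.5, -1.0, 0.25, 0.0]
q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)
```

Each restored weight differs from the original by at most half a quantization step (`scale / 2`). That small error is the price paid for storing one byte per weight instead of four.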

Step 4. Evaluation & Validation: Real-World Readiness Starts Here

Move beyond traditional metrics—evaluate models using adversarial inputs, edge cases, and fairness metrics. Incorporate continuous validation pipelines and shadow deployment environments to assess model behavior under live conditions without impacting users. These steps help identify and address gaps in generalization and performance early.
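One of the simplest fairness metrics to add to an evaluation suite is the demographic parity difference: the gap in positive-prediction rates between groups. The sketch below assumes binary predictions (0 or 1) and one group label per example; the function names are ours.

```python
from collections import defaultdict


def selection_rates(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Share of positive (1) predictions within each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions: list[int], groups: list[str]) -> float:
    """Gap between the highest and lowest group selection rates (0 = parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())
```

A value near zero means the model selects members of each group at similar rates. What counts as an acceptable gap depends on the domain and the applicable regulation, so any threshold should be agreed with compliance stakeholders rather than hard-coded.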

Step 5. MLOps: Streamline, Scale, and Monitor

MLOps frameworks are crucial to overcoming challenges in scaling, latency, and cross-functional collaboration. Use containerized deployments (Docker, KServe) and integrate CI/CD pipelines for models, just as you would with code. Real-time monitoring tools like EvidentlyAI and Arize AI enable the detection of drift, degradation, and compliance violations in production.
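To illustrate the kind of check a drift monitor runs, here is a minimal sketch of the Population Stability Index (PSI) for one numeric feature. It does not use the API of any monitoring product named above; the function name, the bin edges, and the `eps` smoothing value are all assumptions made for the example.

```python
import math


def population_stability_index(expected, actual, bin_edges, eps=1e-6):
    """PSI between a reference sample and a live sample of one feature.

    bin_edges are ascending cut points; values below the first edge fall in
    the first bin and values at or above the last edge fall in the last bin.
    """
    def bin_shares(values):
        if not values:
            raise ValueError("samples must not be empty")
        counts = [0] * (len(bin_edges) + 1)
        for v in values:
            index = sum(1 for edge in bin_edges if v >= edge)
            counts[index] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e_shares, a_shares = bin_shares(expected), bin_shares(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(e_shares, a_shares))
```

A commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift worth investigating, but these cut-offs are conventions rather than guarantees and should be tuned per feature.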

Step 6. Deployment: Serve Smart, Fast, and Cost-Effectively

Reduce latency and costs using model compression, optimized inference runtimes (TensorRT, ONNX Runtime), and serverless inference platforms. Implement failover strategies and input validation layers to keep AI services robust in production. Ensure your APIs are secure and scalable with proper versioning and authorization.
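An input validation layer combined with a failover path can be as small as the sketch below. It assumes a model is any callable that takes a list of numeric features; `EXPECTED_FEATURES` and both function names are hypothetical.

```python
import math
from typing import Callable, Sequence

EXPECTED_FEATURES = 3  # hypothetical input size


def validate_features(features: Sequence[float]) -> None:
    """Reject malformed inputs before they reach the model."""
    if len(features) != EXPECTED_FEATURES:
        raise ValueError(f"expected {EXPECTED_FEATURES} features, got {len(features)}")
    for value in features:
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise ValueError("features must be numeric")
        if not math.isfinite(value):
            raise ValueError("features must be finite")


def predict_with_failover(features, primary: Callable, fallback: Callable):
    """Validate the input, try the primary model, fall back if it fails."""
    validate_features(features)
    try:
        return primary(features), "primary"
    except Exception:
        # In a real service this failure would also be logged and alerted on.
        return fallback(features), "fallback"
```

The fallback is usually a cheaper, more conservative model or a cached default. Returning which path served the request makes it easy to track how often the primary model is failing.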

Step 7. Feedback & Continuous Learning: Keep Evolving

Turn real-world usage into a loop for model improvement. Use active learning to selectively label uncertain predictions and retrain models. Set up feedback ingestion pipelines that allow users or systems to correct predictions. This enables continuous adaptation, helping your AI product stay relevant and effective in changing environments.
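A common way to pick "uncertain predictions" for labeling is entropy-based uncertainty sampling. The sketch below assumes each prediction is a list of class probabilities keyed by an item id; the function names and the `budget` parameter are ours.

```python
import math


def prediction_entropy(probabilities: list[float]) -> float:
    """Shannon entropy of one predicted class distribution (higher = less sure)."""
    return -sum(p * math.log(p) for p in probabilities if p > 0)


def select_for_labeling(predictions: dict[str, list[float]], budget: int) -> list[str]:
    """Return the ids of the `budget` most uncertain predictions."""
    ranked = sorted(
        predictions,
        key=lambda item_id: prediction_entropy(predictions[item_id]),
        reverse=True,
    )
    return ranked[:budget]
```

The selected items go to human annotators, and the newly labeled examples are added to the next retraining run. The labeling budget therefore goes to the cases where the model is least sure of itself.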

By embedding solutions into each phase of AI product development, teams can go from being reactive to proactive, building not just smart products but resilient, ethical, and scalable AI solutions. The more tightly your development lifecycle integrates problem-solving, the faster and more confidently you’ll move from prototype to production.

Next, let’s look at how to secure the development process.

Building Trust in AI: Architecting Secure AI Products

Developing a secure AI product involves embedding security and privacy best practices at every stage of the AI product development lifecycle—from data ingestion to model deployment and monitoring. Here’s how a secure AI product is developed:

a. Secure Data Handling

Security begins with the data. Teams must ensure that all data collected for training and testing is:

- Encrypted at rest and in transit

- Anonymized or de-identified to protect user identities

- Audited for bias, consent, and compliance (e.g., GDPR, HIPAA)

Data access should be limited using role-based access controls (RBAC) and monitored with logging mechanisms.
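Two of the controls above, de-identification and role-based access, can be sketched in a few lines. This is a simplified illustration: the role names, permission strings, and function names are hypothetical, and a keyed hash is pseudonymization rather than full anonymization, so regulations such as GDPR still treat the output as personal data.

```python
import hashlib
import hmac

# Hypothetical role-to-permission mapping; a real system would load this
# from an identity provider or policy store.
ROLE_PERMISSIONS = {
    "data_scientist": {"read:training_data"},
    "ml_engineer": {"read:training_data", "write:models"},
    "analyst": set(),
}


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash so records stay joinable
    without exposing the raw value."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def is_allowed(role: str, permission: str) -> bool:
    """Role-based access check; unknown roles get no access."""
    return permission in ROLE_PERMISSIONS.get(role, set())
```

Using HMAC with a secret key, rather than a plain hash, prevents anyone without the key from rebuilding the mapping by hashing guessed identifiers. The key itself should live in a secrets manager, not in the codebase.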

b. Model Development with Security in Mind

During model training:

- Perform adversarial robustness testing to prevent manipulation (e.g., image perturbation attacks).

- Use secure sandbox environments to train models, especially when dealing with sensitive data.

- Implement model version control and governance, ensuring that only reviewed and signed-off models are promoted to production.

c. Testing for Vulnerabilities

Before deployment, the AI product should undergo:

- Security-focused model evaluation (e.g., behavior under adversarial input)

- Threat modeling of the AI pipeline using frameworks like STRIDE

- Penetration testing on APIs and endpoints where models are exposed

d. Secure Deployment & Inference

At deployment:

- Use encrypted APIs (HTTPS with OAuth2/JWT authentication)

- Leverage containerized environments (Docker, Kubernetes) with proper network policies

- Monitor for model drift, inference anomalies, and malicious usage patterns using AI observability tools

e. Ongoing Governance & Monitoring

Security is not a one-time task. Post-deployment:

- Enable real-time logging, alerting, and auditing

- Apply access controls to models and data endpoints

- Incorporate human-in-the-loop (HITL) systems where AI outcomes have high impact (e.g., healthcare, finance)

By integrating these measures, organizations can ensure their AI products are not only innovative and intelligent but also secure, compliant, and trustworthy, ready for enterprise-grade deployment in today’s risk-aware digital landscape.

Now let’s go through some trends.

Emerging Trends in AI Product Development (2025)

AI product development in 2025 is not only evolving, it is being reimagined by groundbreaking trends that reshape how intelligent systems are designed, deployed, and experienced. These trends are making AI more accessible, agentic, multimodal, and seamlessly embedded into the digital world.

1. Agentic AI Systems

One of the most transformative trends in 2025 is the rise of agentic AI systems that don’t just respond to queries but proactively plan, reason, and take actions across tools and environments to fulfill high-level goals. Unlike traditional task-specific models, agentic AI products can autonomously break down complex goals into subtasks, decide which APIs to call, when to pause for human input, and how to iterate over feedback loops.

Technical Enablers:

- LangGraph, CrewAI, AutoGen, and OpenAgents for orchestrating goal-driven AI workflows.

- Tool calling APIs like OpenAI Functions, Anthropic Tools, and HuggingFace Agents.

- Long-term memory integration using vector databases (Pinecone, Weaviate, LanceDB).
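The control flow of an agentic system (plan, pick a tool, pause for a human where needed) can be shown without any framework. The sketch below is deliberately minimal and does not reflect the API of any library listed above: the tools, the `plan` stub, and the approval callback are all hypothetical, and a real agent would ask an LLM to produce the plan.

```python
from typing import Callable


# Hypothetical tools; real products would wrap actual APIs here.
def check_inventory(item: str) -> str:
    return f"{item}: 3 units in stock"


def place_order(item: str) -> str:
    return f"ordered {item}"


TOOLS: dict[str, Callable[[str], str]] = {
    "check_inventory": check_inventory,
    "place_order": place_order,
}
NEEDS_APPROVAL = {"place_order"}  # actions that pause for human input


def plan(goal: str) -> list[tuple[str, str]]:
    """Stand-in planner; a real agent would ask an LLM for these steps."""
    return [("check_inventory", goal), ("place_order", goal)]


def run_agent(goal: str, approve: Callable[[str, str], bool]) -> list[str]:
    """Execute planned steps in order, asking for approval on risky tools."""
    log = []
    for tool_name, argument in plan(goal):
        if tool_name not in TOOLS:
            log.append(f"skipped unknown tool {tool_name}")
            continue
        if tool_name in NEEDS_APPROVAL and not approve(tool_name, argument):
            log.append(f"{tool_name} rejected by human")
            continue
        log.append(TOOLS[tool_name](argument))
    return log
```

Keeping the tool registry and the approval list as explicit data, separate from the planner, means the set of actions an agent may take can be reviewed and restricted without touching the model.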

Use Cases:

- AI operations co-pilots

- Autonomous coding assistants

- Supply chain optimizers

- Personalized AI tutors

2. Multimodal AI Products

Modern AI products are moving beyond single-mode interaction. In 2025, multimodal models like GPT-4o, Gemini, and Claude 3 Opus enable products to understand and generate across text, vision, speech, and even video. This makes it possible to build deeply interactive and context-rich AI experiences.

Technical Capabilities:

- Visual Q&A, document parsing, and image captioning

- Audio transcription and voice-based instruction

- Multi-turn dialogue that incorporates screenshots, video frames, and spoken input

Development Impact:

Multimodal capabilities reduce the need for separate models and pipelines, simplifying AI product development and unifying data modalities under a single interface.

3. AI-Native UX Design

With the advent of smarter AI models, traditional UI paradigms are giving way to AI-native experiences. Products in 2025 are increasingly built around voice-first, chat-first, and proactive co-pilot interfaces. These interfaces allow users to engage in natural, unstructured ways—where AI understands intent and adapts the interface dynamically.

Design Trends:

- Conversational UI patterns for forms, dashboards, and queries

- Micro-agents embedded into every part of the UI

- Context-aware personalization using memory and user profiling

Tools Used:

- Rasa, Voiceflow, Retell.ai for conversational flows

- React + LangChain for building interactive LLM UIs

4. Low-Code/No-Code AI Development

The democratization of AI continues to accelerate. In 2025, low-code and no-code AI platforms enable non-technical users to train models, define pipelines, and build end-to-end AI-powered applications—without writing a single line of code.

Platforms Empowering Citizen Developers:

- Google AutoML, Akkio, Make.com (Integromat), Lobe, Peltarion

- Flowise, Langflow, and Promptly for no-code LLM agents

Benefits:

- Faster prototyping and deployment

- Reduced development costs

- Business domain experts can directly build AI workflows

5. AI + IoT Convergence

AI is becoming embedded at the edge, thanks to compact, efficient models and specialized inference chips. From smart thermostats to industrial robots, AI + IoT convergence is enabling real-time, offline, and privacy-preserving decision-making at the edge.

Technical Enablers:

- TinyML for deploying compressed models on microcontrollers

- ONNX Runtime, TensorFlow Lite, NVIDIA Jetson, and Coral Edge TPU

- Federated learning for edge-based continuous training
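The aggregation step at the heart of federated learning can be sketched as a weighted average of client updates, often called federated averaging. This is a simplified illustration in plain Python that treats each model as a flat list of weights; the function name and the input format are assumptions for the example.

```python
def federated_average(client_updates: list[tuple[list[float], int]]) -> list[float]:
    """Weighted average of client weight vectors.

    Each update is (weights, num_local_examples); clients with more data
    contribute proportionally more to the global model.
    """
    total_examples = sum(n for _, n in client_updates)
    if total_examples <= 0:
        raise ValueError("at least one client must report examples")
    size = len(client_updates[0][0])
    if any(len(weights) != size for weights, _ in client_updates):
        raise ValueError("all clients must send vectors of the same length")
    return [
        sum(weights[i] * n for weights, n in client_updates) / total_examples
        for i in range(size)
    ]
```

Only the weight vectors and example counts leave each device, never the raw sensor data. That is what makes this approach attractive for privacy-sensitive edge deployments.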

Use Cases:

- Predictive maintenance in manufacturing

- Real-time analytics on wearable health devices

- Smart agriculture systems with environmental sensors


Tools and Frameworks for AI Product Development

To keep up with the demands of 2025’s AI landscape, development teams rely on a modern stack of AI tools, libraries, and cloud-native infrastructure:

| Development Stage | Tools & Frameworks |
|---|---|
| Data Collection & Labeling | Labelbox, Snorkel, Prodigy, Roboflow, SuperAnnotate |
| Data Engineering | dbt, Apache Kafka, Apache Arrow, Airbyte, LakeFS |
| Model Training & Tuning | PyTorch, TensorFlow, Hugging Face Transformers, AutoML, Ray Tune, FastAI |
| Experiment Tracking | Weights & Biases, MLflow, Comet.ml |
| Model Deployment & Serving | Docker, KServe, BentoML, Seldon Core, ONNX Runtime, NVIDIA Triton Inference Server |
| MLOps & Orchestration | Kubeflow, Vertex AI, Azure ML, Flyte, Airflow, LangChain, LangGraph |
| Monitoring & Drift Detection | Arize AI, WhyLabs, EvidentlyAI, Fiddler |
| LLM Tooling & Agent Frameworks | LangChain, CrewAI, AutoGen, Semantic Kernel, OpenAgents, Flowise, LangFlow |
| Conversational UI & Voice | Rasa, Retell, Dialogflow CX, Voiceflow |
| Security & Governance | Truera, IBM Watson OpenScale, Microsoft Responsible AI, Nvidia NeMo Guardrails |

Conclusion

AI product development in 2025 is both a science and an art. It demands expertise in software engineering, data science, DevOps, UX design, and regulatory understanding. With the rapid pace of evolution in AI hardware (e.g., NVIDIA Blackwell, AWS Trainium), foundation models, and open-source innovation, companies that invest in scalable, ethical, and intelligent AI product development pipelines today are best positioned to lead tomorrow.

Whether you’re building autonomous agents, healthcare AI tools, financial forecasting engines, or personalized edtech platforms, this is the golden era of AI product development. The fusion of innovation and responsible engineering is no longer optional—it’s the foundation of all impactful AI solutions.

At Emorphis Technologies, our AI experts bring deep domain knowledge, full-stack technical capabilities, and proven frameworks to help you accelerate every phase of your AI product development journey. From defining the right problem and building scalable data pipelines to designing agentic architectures, fine-tuning large language models, and deploying robust MLOps pipelines, we guide you from ideation to production.


Whether you’re a startup experimenting with your first MVP or an enterprise scaling AI across multiple verticals, Emorphis Technologies ensures your AI products are ethically aligned, performance-optimized, and future-ready.