The conversation around SLM vs LLM has become one of the most important discussions in enterprise technology planning. As artificial intelligence in 2026 moves from experimentation to operational maturity, organizations are no longer impressed by model size alone. Instead, they are asking practical questions:

- Which AI model delivers consistent value?

- What approach aligns with compliance requirements?

- Which solution scales without escalating costs?

Large Language Models once symbolized the peak of AI innovation. However, Small Language Models are now redefining how artificial intelligence is deployed in real business environments.

This article provides a comprehensive comparison of SLM vs LLM, explores real-world use cases, and explains why enterprises are increasingly choosing SLMs for domain-specific AI solutions and long-term AI strategies.

Let’s start by defining both terms.

What Is an LLM (Large Language Model)?

A Large Language Model serves as a foundational AI system that engineers train on massive datasets, including public internet content, books, research papers, code repositories, and conversational data. Developers build LLMs with billions or even trillions of parameters, enabling them to perform a wide range of general-purpose language tasks with high accuracy and flexibility.

LLMs can answer questions, generate long-form content, write and debug code, summarize complex documents, and reason across diverse subject areas. Organizations value LLMs for their broad knowledge coverage, even though these models do not specialize deeply in any single domain.

In the landscape of artificial intelligence in 2026, enterprises increasingly use LLMs as base models to power chatbots, AI copilots, and exploratory intelligence systems. Teams deploy these models at scale by investing in robust infrastructure, enforcing strong governance frameworks, and managing ongoing operational costs to ensure secure and sustainable enterprise adoption.

What Is an SLM (Small Language Model)?

A Small Language Model is a compact, purpose-built AI model that teams train or fine-tune on curated, domain-relevant datasets. Unlike LLMs, SLMs are designed to perform exceptionally well within a defined business context such as healthcare, manufacturing, finance, legal, or customer support.

SLMs use significantly fewer parameters, which allows teams to deploy them faster, operate them at lower cost, and integrate them more easily into existing systems. Enterprises often fine-tune SLMs or train them from scratch as part of a custom AI solution strategy, ensuring close alignment with internal workflows, regulatory compliance, and strict data privacy standards.
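To put the parameter gap in concrete terms, the sketch below estimates how much memory a model's weights occupy. It relies on the common rule of thumb of about 2 bytes per parameter at fp16/bf16 precision (4 at fp32, roughly 1 at int8, and 0.5 with 4-bit quantization). The 3B and 70B sizes are illustrative rather than specific products, and real deployments need extra memory for activations and the KV cache.

```python
# Rule-of-thumb bytes per parameter at common numeric precisions.
# Only the weights are counted; activations, the KV cache, and runtime
# overhead add more on top of this.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,  # bf16 uses the same 2 bytes
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    if precision not in BYTES_PER_PARAM:
        raise ValueError(f"unknown precision: {precision!r}")
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Illustrative sizes only, not specific products.
    for label, params in [("3B-parameter model", 3e9), ("70B-parameter model", 70e9)]:
        for precision in ("fp16", "int4"):
            print(f"{label} @ {precision}: {weight_memory_gb(params, precision):.1f} GB")
```

At fp16 the 3B example needs about 6 GB for its weights, which fits on a single high-end GPU, while the 70B example needs about 140 GB, more than one 80 GB accelerator can hold, so it usually has to be split across devices or aggressively quantized.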

In the SLM vs LLM discussion, SLMs continue to gain traction as organizations prioritize operational efficiency, predictable performance, and domain-specific accuracy over broad, generalized intelligence. The following section highlights key differences between SLMs and LLMs to help enterprises evaluate the right model strategy.

SLM vs LLM: Architectural and Operational Differences

Understanding SLM vs LLM requires more than comparing model size. The real differences emerge across architecture, training philosophy, deployment models, and long-term operational impact, and these factors directly influence how enterprises adopt and scale AI systems.

Training Philosophy

LLMs are trained on broad, open-ended datasets to maximize general capability. In contrast, SLMs are trained on focused, high-quality datasets to maximize relevance, precision, and accuracy within a specific domain. As a result, SLMs align more closely with the specific business use cases they are built for.

Operational Control

SLMs also give enterprises greater control over data usage, model behavior, and output consistency, and teams can enforce stricter governance and validation rules around a narrowly scoped model. All language models generate text probabilistically, but an LLM's broad, open-ended scope makes its behavior harder to constrain, which can introduce unpredictability and risk in mission-critical workflows.
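One common way to enforce such rules is a validation gate that sits between the model and downstream systems and rejects any response that breaks an agreed output contract. The minimal sketch below assumes the model is prompted to return JSON; the field names, allowed statuses, and SOP identifiers are hypothetical.

```python
from __future__ import annotations

import json
from dataclasses import dataclass, field

# Hypothetical output contract for a compliance-check assistant.
REQUIRED_FIELDS = ("status", "sop_id", "rationale")
ALLOWED_STATUSES = {"pass", "fail", "needs_review"}


@dataclass
class ValidationResult:
    accepted: bool
    errors: list[str] = field(default_factory=list)
    payload: dict | None = None


def validate_output(raw_text: str, known_sop_ids: set[str]) -> ValidationResult:
    """Accept a model response only if it satisfies every business rule."""
    try:
        payload = json.loads(raw_text)
    except json.JSONDecodeError as exc:
        return ValidationResult(False, [f"not valid JSON: {exc.msg}"])
    if not isinstance(payload, dict):
        return ValidationResult(False, ["response must be a JSON object"])

    errors = [f"missing field: {name}" for name in REQUIRED_FIELDS if name not in payload]
    status = payload.get("status")
    if "status" in payload and (not isinstance(status, str) or status not in ALLOWED_STATUSES):
        errors.append(f"status must be one of {sorted(ALLOWED_STATUSES)}")
    sop_id = payload.get("sop_id")
    if "sop_id" in payload and (not isinstance(sop_id, str) or sop_id not in known_sop_ids):
        errors.append(f"unknown SOP reference: {sop_id!r}")

    if errors:
        return ValidationResult(False, errors)
    return ValidationResult(True, [], payload)


if __name__ == "__main__":
    sops = {"SOP-101", "SOP-202"}
    good = '{"status": "pass", "sop_id": "SOP-101", "rationale": "Batch record complete."}'
    bad = '{"status": "approved", "sop_id": "SOP-999"}'
    print(validate_output(good, sops).accepted)  # True
    print(validate_output(bad, sops).errors)
```

Rejected responses can be retried, routed to a human, or logged. Because the rules live outside the model, they can be audited and changed without retraining.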

Cost Structure

LLMs typically demand continuous, high-volume compute resources. SLMs, on the other hand, deliver more predictable and manageable cost structures. This cost efficiency matters especially for enterprise AI adoption strategies in 2026, where sustainability and ROI drive long-term decision-making.
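Cost predictability can be illustrated by modeling how monthly spend responds to traffic under two common pricing shapes: pay-per-token hosted APIs, where spend grows linearly with usage, and self-hosted capacity, where spend rises in steps as servers are added (an option that is often more practical for SLMs because they fit on less hardware). All prices and throughput figures below are placeholders, not quotes from any provider.

```python
import math


def usage_based_monthly_cost(requests: int, tokens_per_request: int,
                             price_per_million_tokens: float) -> float:
    """Pay-per-token pricing: spend grows in direct proportion to traffic."""
    return requests * tokens_per_request / 1_000_000 * price_per_million_tokens


def capacity_based_monthly_cost(requests: int, requests_per_server: int,
                                cost_per_server: float) -> float:
    """Self-hosted pricing: spend is a step function of provisioned servers."""
    servers = max(1, math.ceil(requests / requests_per_server))
    return servers * cost_per_server


if __name__ == "__main__":
    # Placeholder figures for illustration only; substitute real quotes.
    for monthly_requests in (1_000_000, 3_000_000):
        usage = usage_based_monthly_cost(monthly_requests, 1_500, 5.0)
        capacity = capacity_based_monthly_cost(monthly_requests, 2_000_000, 3_000.0)
        print(f"{monthly_requests:>9,} requests: usage-based ${usage:,.0f}, "
              f"capacity-based ${capacity:,.0f}")
```

With these placeholder numbers, tripling traffic triples the usage-based bill but only doubles the capacity-based one. With real figures the comparison can come out either way, so the value of the sketch is the shape of each curve rather than the totals.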

SLM vs LLM: Comparison Table

| Dimension | SLM | LLM |
|---|---|---|
| Model scope | Designed for a clearly defined business function or industry use case. SLMs focus on depth rather than breadth, enabling reliable performance in domain-specific AI solutions. | Built to handle a wide variety of topics and tasks across industries, prioritizing general intelligence over deep specialization. |
| Parameter count | Uses far fewer parameters, making the model easier to train, fine-tune, and maintain, with faster inference. | Contains billions or even trillions of parameters, requiring extensive compute resources and specialized infrastructure. |
| Training data | Trained or fine-tuned on curated, proprietary, and enterprise-approved datasets that reflect real operational workflows and business rules. | Trained on massive internet-scale datasets that may include unverified, outdated, or irrelevant information. |
| Accuracy in business workflows | Delivers consistently high accuracy in repeatable enterprise tasks such as compliance checks, reporting, and decision support. | Accuracy varies with prompt quality and fine-tuning, making outputs less predictable in regulated workflows. |
| Compliance readiness | Easier to validate, audit, and certify against regulatory requirements, making SLMs suitable for healthcare, finance, and manufacturing. | Compliance is complex due to opaque training data, broad open-ended behavior, and challenges in explainability. |
| Infrastructure cost | Offers predictable and controlled infrastructure costs, supporting long-term enterprise AI adoption planning. | High infrastructure and operational costs due to continuous demand for compute, memory, and networking resources. |
| Latency | Provides low-latency responses, making it well suited to real-time and near-real-time decision-making systems. | Higher latency due to model size and cloud dependency, which can affect time-sensitive applications. |
| Deployment flexibility | Can be deployed on-premises, on edge devices, or in private cloud environments, supporting data sovereignty and security. | Mostly deployed in public cloud environments because of hardware and scalability requirements. |
| Fit for a custom AI solution | Highly suitable for building a custom AI solution that aligns tightly with enterprise workflows and business logic. | Often requires significant adaptation and governance layers to fit into a custom AI solution. |
| Enterprise AI adoption fit | Strong alignment with enterprise AI adoption goals such as governance, scalability, and measurable ROI. | Best suited to selective use cases such as research, ideation, or generalized assistance rather than core operations. |

Why This Table Matters for Artificial Intelligence in 2026

This comparison highlights a critical shift in the SLM vs LLM conversation. In 2026, enterprises no longer select models based on raw capability alone. Instead, they also evaluate operational reliability, compliance readiness, cost predictability, and long-term value creation.

Moreover, organizations increasingly position SLMs as the execution layer of enterprise intelligence, where accuracy, consistency, and governance matter most. LLMs, on the other hand, primarily serve as exploratory, assistive, or foundational tools that support ideation, discovery, and broad reasoning.

Understanding these differences therefore enables organizations to design AI strategies that support scalable enterprise AI adoption. It also helps teams build robust, domain-specific solutions that align with regulatory requirements, business workflows, and sustainable AI growth.

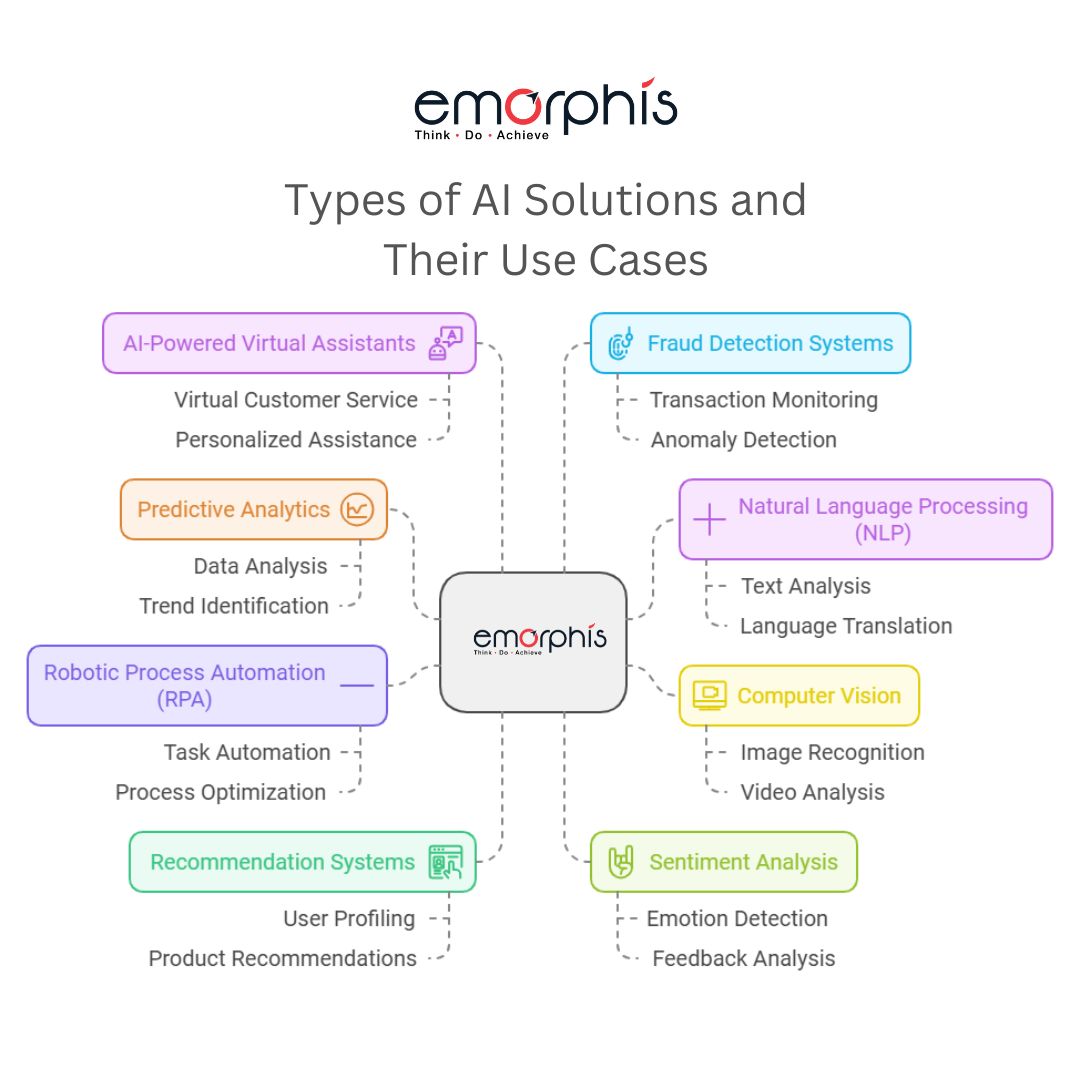

Use Cases of Large Language Models

Large Language Models continue to play a visible and influential role in artificial intelligence in 2026, particularly in scenarios that demand flexibility, creativity, and broad contextual understanding. Their ability to reason across diverse topics makes them a strong fit for exploratory and cross-functional initiatives. However, their primary strength lies in ideation and discovery rather than execution-heavy, tightly governed enterprise workflows.

Enterprise Knowledge Exploration

Large Language Models perform especially well when organizations need to explore massive volumes of unstructured data, such as emails, documents, presentations, research papers, and historical records. Additionally, when domain boundaries remain unclear or overlap significantly, LLMs can connect disparate information and surface meaningful insights.

For enterprises in the early stages of AI maturity, this capability supports discovery-driven use cases such as internal research, market intelligence, and innovation analysis. In the SLM vs LLM context, organizations often use LLMs as a starting point to understand which forms of intelligence they can later operationalize through more specialized and controlled models.

Content and Communication Automation

LLMs also excel at generating human-like text, which makes them highly effective for content and communication automation. Marketing teams rely on LLMs for campaign drafts, product descriptions, and social messaging. Similarly, technical teams use them for first-pass documentation, knowledge base content, and internal communications.

In 2026, enterprises increasingly deploy Large Language Models to reduce the manual effort associated with content creation. However, because tone, accuracy, and factual grounding can vary, organizations typically enforce strong human review and governance layers before releasing LLM-generated content externally.

Developer Productivity

One of the most mature and widely adopted enterprise use cases for Large Language Models is developer productivity. LLM-powered tools actively assist engineers with code generation, refactoring, documentation, and debugging recommendations. As a result, development teams accelerate delivery timelines while reducing cognitive workload.

From an enterprise AI adoption standpoint, this use case delivers immediate and measurable productivity gains without deeply embedding AI into core business logic. Therefore, many organizations adopt LLM-driven developer workflows early in their AI transformation journey.

Multilingual and Global Operations

Because developers train Large Language Models on multilingual datasets, these models perform well in translation, localization, and cross-cultural communication tasks. Global enterprises use LLMs to support multilingual customer engagement, cross-region collaboration, and localized content production.

This capability also supports scalability across global operations. However, organizations must apply governance and validation mechanisms, especially in regulated industries where linguistic precision and contextual accuracy remain critical.

Strategic Role of LLMs in Enterprise AI Adoption

Collectively, these Large Language Model use cases often act as entry points for enterprise AI adoption. They enable experimentation, accelerate early value realization, and help organizations build confidence in AI capabilities before transitioning toward more specialized, domain-focused deployments within the broader SLM vs LLM strategy framework.

Use Cases of Small Language Models

Small Language Models excel in environments where accuracy, trust, and operational consistency are non-negotiable. In 2026, enterprises increasingly deploy SLMs as production-grade intelligence systems that integrate directly into core business workflows. Moreover, organizations rely on SLMs when governance, predictability, and domain alignment matter more than generalized reasoning.

Healthcare and Life Sciences

In healthcare and life sciences, SLMs support clinical decision workflows, medical documentation, and regulatory reporting with a high level of reliability. Teams train these models on validated clinical guidelines, approved protocols, and trusted enterprise datasets. As a result, SLMs can significantly reduce, though not eliminate, the risk of hallucinations and incorrect recommendations.

Additionally, their narrow scope makes SLMs easier to test, audit, and explain, which suits environments where patient safety, audit readiness, and regulatory compliance remain critical. Therefore, healthcare stands out as one of the strongest examples of domain-specific AI solutions powered by Small Language Models.

Manufacturing and Quality Systems

SLMs also play a critical role in manufacturing environments by supporting SOP execution, audit preparation, deviation management, and real-time quality checks. Organizations train these models on process documentation, quality standards, and operational data to ensure alignment with real-world workflows.

Unlike LLMs, SLMs can tightly align with shop-floor realities and operational constraints. Consequently, they deliver consistent guidance and actionable insights. In the SLM vs LLM discussion, manufacturing environments clearly favor SLM-based custom AI solutions.

Financial Services

In financial services, enterprises use SLMs for risk modeling, compliance reporting, transaction analysis, and fraud detection. Because SLMs operate within narrowly defined datasets and rule-based constraints, they provide higher predictability and traceability.

Therefore, financial institutions adopt Small Language Models to scale enterprise AI adoption while maintaining strict regulatory compliance and risk controls.

Legal and Contract Analysis

Legal teams also rely on SLMs for clause extraction, policy interpretation, document classification, and contract risk assessment. Teams train these models on legal language, internal precedents, and jurisdiction-specific regulations.

Moreover, the focused scope and predictable behavior of Small Language Models align well with legal workflows where precision, consistency, and defensibility outweigh creative flexibility.

Internal Enterprise Assistants

SLMs fine-tuned on proprietary enterprise data power internal assistants that help employees with policy queries, procedural guidance, and secure knowledge retrieval. Because these models can run inside the enterprise's own environment and answer from internal sources rather than public data, they significantly reduce data exposure and intellectual property risks.
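Secure knowledge retrieval usually means filtering by the user's permissions before any text reaches the model, then passing the surviving passages to the SLM as context (retrieval-augmented generation). The sketch below shows only the permission-aware retrieval step and uses simple keyword overlap for brevity, whereas production systems typically use embedding search. The document IDs, texts, and role names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_roles: frozenset[str]


def retrieve(query: str, docs: list[Document], user_roles: set[str], top_k: int = 3) -> list[Document]:
    """Return the best keyword matches among documents the user may see."""
    query_terms = set(query.lower().split())
    scored = []
    for doc in docs:
        if not doc.allowed_roles & user_roles:
            continue  # permission check happens before any text can reach the model
        overlap = len(query_terms & set(doc.text.lower().split()))
        if overlap:
            scored.append((overlap, doc.doc_id, doc))
    # Highest overlap first; ties broken by document id for stable results.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [doc for _, _, doc in scored[:top_k]]


if __name__ == "__main__":
    corpus = [
        Document("HR-1", "Annual leave policy for employees who accrue leave monthly", frozenset({"employee"})),
        Document("HR-2", "Parental leave policy and eligibility", frozenset({"employee"})),
        Document("FIN-7", "Quarterly close procedure for the finance team", frozenset({"finance"})),
    ]
    hits = retrieve("leave policy", corpus, user_roles={"employee"})
    print([d.doc_id for d in hits])  # ['HR-1', 'HR-2']
    print(retrieve("close procedure", corpus, user_roles={"employee"}))  # [] (not permitted)
```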

In 2026, internal enterprise assistants represent one of the fastest-growing domain-specific AI solutions, reinforcing the strategic role of Small Language Models in scalable and secure enterprise AI adoption.

Artificial Intelligence in 2026

Artificial intelligence in 2026 reflects a clear shift toward maturity, governance, and real-world business impact rather than experimentation. Enterprises are moving beyond generic AI tools and adopting domain-specific, operational systems that deliver measurable and repeatable value. Moreover, organizations prioritize responsible AI practices, scalable deployment models, and tight alignment with core business processes.

Instead of selecting models based on size or novelty, enterprises evaluate AI systems based on reliability, compliance readiness, operational control, and long-term sustainability. As a result, artificial intelligence in 2026 focuses less on disruption and more on dependable execution that supports critical workflows across industries.

Why Enterprises Are Choosing SLM Over LLM

The evolution of the SLM vs LLM discussion signals a fundamental shift in enterprise AI strategy. Organizations are no longer optimizing for experimentation or proof-of-concept success alone. Instead, in 2026, enterprises actively prioritize execution-driven, production-grade AI systems. At this stage, reliability, governance, and economic sustainability outweigh raw model scale or generalized intelligence. Small Language Models align closely with these enterprise priorities.

1. Predictable and Consistent Performance

Small Language Models, scoped to a single domain and combined with controlled decoding settings and rule checks, deliver consistent and repeatable outputs that follow defined business rules, operational logic, and domain constraints. As a result, SLMs significantly reduce uncertainty in mission-critical workflows such as compliance validation, clinical decision support, and financial reporting.

For enterprises, predictable performance directly translates into lower operational risk, higher system trust, and faster user adoption. All language models generate text probabilistically, but a large model's open-ended scope makes that behavior harder to constrain. A narrowly scoped SLM is easier to test and bound, so AI systems built on it can function as dependable components of core business processes.
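In practice, much of that repeatability comes from how the next token is chosen, not from model size alone. The toy sketch below contrasts greedy decoding, which always picks the highest-scoring option and therefore returns the same answer for the same input, with temperature sampling, which can vary from run to run. The scores are made-up numbers, not output from a real model.

```python
from __future__ import annotations

import math
import random


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Convert raw scores to probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def greedy_pick(logits: list[float]) -> int:
    """Greedy decoding: always take the highest-scoring option."""
    return max(range(len(logits)), key=lambda i: logits[i])


def sampled_pick(logits: list[float], temperature: float, rng: random.Random) -> int:
    """Temperature sampling: draw an option in proportion to its probability."""
    probs = softmax(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


if __name__ == "__main__":
    # Made-up scores for the labels "pass", "fail", "needs_review".
    logits = [2.0, 1.5, 0.5]
    print({greedy_pick(logits) for _ in range(100)})  # {0}: identical every time
    rng = random.Random()
    print({sampled_pick(logits, 1.0, rng) for _ in range(100)})  # typically several labels
```

Even with greedy decoding, batching and floating-point effects on GPUs can occasionally introduce small differences, which is one reason teams pair decoding settings with output validation.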

2. Stronger Compliance, Security, and Data Governance

When deployed on infrastructure the enterprise controls, SLMs give teams full control over training data, inference behavior, and deployment architecture. This level of control becomes essential in regulated environments where data sovereignty, auditability, and explainability are mandatory.

Moreover, by operating within tightly governed datasets and controlled environments, Small Language Models simplify regulatory validation, internal audits, and external certifications. Consequently, enterprises increasingly rely on SLMs to support compliant enterprise AI adoption in industries such as healthcare, life sciences, finance, and manufacturing.

3. Accelerated Time to Value and ROI

Because of their smaller size and focused scope, SLMs move through training, fine-tuning, validation, and deployment cycles much faster than large models. Enterprises can transition from concept to production without prolonged infrastructure provisioning or extensive model alignment efforts.

Therefore, this rapid deployment capability accelerates return on investment and supports agile AI adoption strategies. Organizations deliver measurable business value earlier while continuing to refine and optimize AI systems over time.

4. Economically Scalable Enterprise AI Adoption

SLMs scale efficiently across teams, departments, and business units without steep increases in infrastructure or operational costs. Their predictable compute requirements allow enterprises to plan AI expansion with greater confidence and budgetary control.

As a result, Small Language Models support long-term, organization-wide enterprise AI adoption rather than isolated, high-cost implementations that remain difficult to sustain.

5. Deep Alignment With Enterprise Business Processes

Small Language Models are designed to mirror enterprise workflows, decision hierarchies, and domain logic. This design approach enables seamless integration with existing systems such as ERP, EHR, MES, CRM, and compliance platforms.

Rather than forcing organizations to adapt their processes to AI behavior, SLMs adapt to the organization. Consequently, enterprises achieve smoother integration, higher adoption rates, and sustained operational impact across business functions.

How Is Enterprise AI Adoption Evolving for Artificial Intelligence in 2026, and What Does SLM vs LLM Mean for Custom AI Solutions?

a. Enterprise AI Adoption Shifts From Experimentation to Execution

In 2026, enterprise AI adoption no longer centers on experimentation or technological novelty. Instead, organizations are shifting toward execution-focused AI initiatives that deliver measurable business outcomes. Enterprises now embed AI directly into operational workflows rather than confining it to innovation labs or pilot programs.

Moreover, this shift reflects rising expectations around accountability, performance, and long-term value creation. Organizations evaluate AI systems based on their ability to support core business functions reliably, securely, and at scale.

b. Operational, Governed, and Business-Aligned AI Takes Priority

As enterprise AI adoption matures, three priorities clearly emerge. First, organizations prioritize operational AI over experimental AI to ensure direct business impact. Second, they favor governed intelligence over open-ended intelligence, driven by regulatory mandates, data privacy requirements, and the need for explainable decisions.

Additionally, enterprises increasingly select business-aligned AI over generic AI. They choose models that reflect internal workflows, policies, and domain expertise. As a result, AI adoption shifts decisively from a technology-first mindset to an outcome-driven strategy.

c. Small Language Models Fit the Enterprise AI Model

Within this evolving enterprise landscape, Small Language Models naturally align with business needs. SLMs deliver controlled, predictable intelligence that teams can validate, audit, and govern with confidence. Their stable, well-bounded behavior supports high-trust environments where consistency, accuracy, and compliance remain essential.
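Auditability in practice usually depends on recording, for every response, which model version produced it and whether it passed validation. The minimal sketch below writes such records as JSON Lines; the field names and the model name and version in the example are hypothetical, and a real deployment would also need retention rules, access control, and tamper protection for the log.

```python
from __future__ import annotations

import hashlib
import json
from datetime import datetime, timezone


def make_audit_record(model_name: str, model_version: str, prompt: str,
                      output: str, validation_passed: bool) -> dict:
    """Build one log entry tying an output to the exact model version that produced it."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model": model_name,
        "model_version": model_version,
        # Store a hash rather than the raw prompt so the log can show what was
        # asked without duplicating potentially sensitive text.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "output": output,
        "validation_passed": validation_passed,
    }


def append_to_log(path: str, record: dict) -> None:
    """Append the record as one JSON object per line (JSON Lines)."""
    with open(path, "a", encoding="utf-8") as handle:
        handle.write(json.dumps(record, sort_keys=True) + "\n")


if __name__ == "__main__":
    record = make_audit_record(
        "compliance-slm", "2026.01.3",  # hypothetical model name and version
        "Check batch 42 against SOP-101", '{"status": "pass"}', True,
    )
    print(json.dumps(record, indent=2, sort_keys=True))
```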

Therefore, in 2026, enterprises increasingly view SLMs as enablers of responsible AI adoption, balancing innovation with governance and security.

d. Domain-Specific AI Solutions Become the Enterprise Standard

Domain-specific AI solutions are rapidly becoming the enterprise standard. Rather than relying on a single monolithic intelligence layer, organizations deploy multiple specialized AI models tailored to individual business functions such as compliance, operations, finance, and customer support.

These solutions combine Small Language Models, structured enterprise data, and embedded business rules to deliver targeted and reliable outcomes. Consequently, this approach improves resilience, simplifies governance, and enables incremental scaling of enterprise AI capabilities.

e. Modular AI Architectures Improve Scalability and Governance

By adopting modular, function-specific AI architectures, enterprises reduce systemic risk and improve maintainability. Each AI component can be independently optimized, validated, and updated without disrupting the broader ecosystem.

Moreover, this architectural strategy aligns strongly with SLM-based designs. It supports sustainable enterprise AI adoption by balancing flexibility, control, and long-term scalability.
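A modular design can be as simple as a registry that maps each business function to its own model and version, so one component can be upgraded or rolled back without touching the rest. In the sketch below the handlers are stand-in functions rather than real model calls, and the function and model names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ModelEntry:
    name: str
    version: str
    handler: Callable[[str], str]  # stand-in for a call to the deployed model


class ModelRegistry:
    """Maps each business function to its own independently versioned model."""

    def __init__(self) -> None:
        self._entries: dict[str, ModelEntry] = {}

    def register(self, function: str, entry: ModelEntry) -> None:
        # Re-registering a function swaps only that component; others are untouched.
        self._entries[function] = entry

    def run(self, function: str, text: str) -> str:
        if function not in self._entries:
            raise KeyError(f"no model registered for {function!r}")
        return self._entries[function].handler(text)

    def versions(self) -> dict[str, str]:
        return {fn: f"{e.name}@{e.version}" for fn, e in self._entries.items()}


if __name__ == "__main__":
    registry = ModelRegistry()
    registry.register("compliance", ModelEntry("compliance-slm", "1.0", lambda t: f"[compliance 1.0] {t}"))
    registry.register("support", ModelEntry("support-slm", "2.3", lambda t: f"[support 2.3] {t}"))
    # Upgrade only the compliance model.
    registry.register("compliance", ModelEntry("compliance-slm", "1.1", lambda t: f"[compliance 1.1] {t}"))
    print(registry.versions())  # {'compliance': 'compliance-slm@1.1', 'support': 'support-slm@2.3'}
    print(registry.run("support", "Reset my password"))  # [support 2.3] Reset my password
```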

f. SLM vs LLM in Custom AI Solution Development

The SLM vs LLM decision plays a pivotal role in custom AI solution development. Organizations often use Large Language Models during ideation, research, and exploratory phases, where broad contextual understanding and flexibility add value.

However, Small Language Models become the production engines that power day-to-day enterprise workflows. Their domain alignment, governance readiness, and predictable behavior make them ideal for operational use cases.

g. Hybrid AI Architectures Reflect Mature Enterprise AI Adoption

In 2026, many enterprises adopt hybrid AI architectures. In this model, LLMs support exploration, insight generation, and early-stage innovation, while SLMs execute validated, governed, and repeatable business processes.

This layered architecture allows organizations to leverage the strengths of both model types while maintaining control, compliance, and scalability. As a result, it represents a mature and pragmatic approach to enterprise AI adoption.
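The layered pattern described above often comes down to a routing decision made before any model is called. The sketch below assumes an upstream intent classifier (not shown) that returns an intent label and a confidence score; the intent names, domains, and the 0.8 threshold are hypothetical, and sending low-confidence governed requests to human review is one possible design choice, not a requirement.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical mapping from governed intents to the domain SLM that owns them.
SLM_INTENTS = {
    "deviation_report": "quality",
    "policy_question": "hr",
    "invoice_check": "finance",
}


@dataclass(frozen=True)
class Route:
    target: str  # "slm:<domain>", "human_review", or "llm"
    reason: str


def route(intent: str, confidence: float, threshold: float = 0.8) -> Route:
    """Send governed, well-understood work to an SLM and open-ended work to an LLM."""
    domain = SLM_INTENTS.get(intent)
    if domain is not None and confidence >= threshold:
        return Route(f"slm:{domain}", "governed intent recognized with high confidence")
    if domain is not None:
        # A governed workflow, but the classifier is unsure: escalate rather than guess.
        return Route("human_review", "governed intent below confidence threshold")
    return Route("llm", "exploratory request outside governed workflows")


if __name__ == "__main__":
    cases = [("deviation_report", 0.93), ("deviation_report", 0.55), ("brainstorm_campaign", 0.97)]
    for intent, confidence in cases:
        print(intent, confidence, "->", route(intent, confidence).target)
```

Keeping the routing rule outside both models means the boundary between governed and exploratory work can be reviewed and adjusted on its own.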

h. The Strategic Implication for Enterprises

The evolution of enterprise AI adoption reveals a critical insight. The future of AI does not depend on choosing between SLMs and LLMs. Instead, success depends on orchestrating them intelligently.

Enterprises that align AI models with real business needs, governance requirements, and operational realities will be well positioned to succeed in 2026 and beyond.

Conclusion

The future of SLM vs LLM is not about competition but about alignment with business reality. In 2026, enterprises are prioritizing trust, control, scalability, and efficiency over raw model size.

Small Language Models are becoming the backbone of enterprise AI adoption, enabling domain-specific AI solutions that are secure, predictable, and cost-effective. Large Language Models continue to drive innovation and discovery, but SLMs are where sustained operational value is being realized.

For organizations investing in a custom AI solution, the message is clear. The smartest AI strategy is not about bigger intelligence. It is about the right intelligence, built for the right purpose.