I was exploring some Hadoop batch processing concepts last weekend. All of a sudden I wondered how I could use Hadoop 1.0 batch processing with a relational database (actually, with a website). I explored further, and after some effort I came to know about Apache Sqoop.

What is Sqoop?

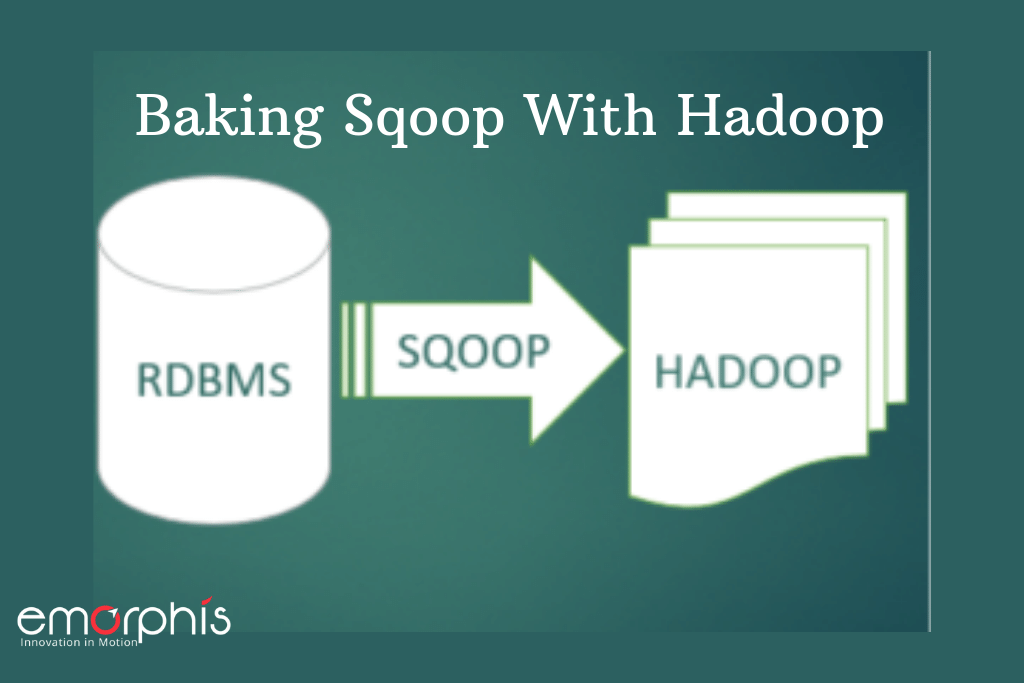

Apache Sqoop is a tool designed for efficiently transferring bulk data between Apache Hadoop and structured datastores such as relational databases. With it you can move data between Hadoop 1.0 and relational databases: you can import data from a relational database management system like MySQL or MS SQL Server into HDFS, and after transforming and processing the data you can export it from HDFS back to the database.

That makes batch processing possible, i.e. we can put Hadoop to good use. In this post, I am going to explain how you can do this:

1. Install and configure Sqoop

2. Import data from MySQL to HDFS via Sqoop

First let’s talk about how to install and configure Sqoop:

1. Download the sqoop-1.4.4.bin_hadoop-1.0.0.tar.gz file from

www.apache.org/dyn/closer.cgi/sqoop/1.4.4

2. Extract the archive using the command

amit@ubuntu:~$ tar -xzf sqoop-1.4.4.bin_hadoop-1.0.0.tar.gz

3. Move sqoop-1.4.4.bin_hadoop-1.0.0 to /usr/local/sqoop using the command

amit@ubuntu:~$ sudo mv sqoop-1.4.4.bin_hadoop-1.0.0 /usr/local/sqoop

4. Create a directory sqoop in /usr/lib using the command

amit@ubuntu:~$ sudo mkdir /usr/lib/sqoop

5. Go to the extracted folder sqoop-1.4.4.bin_hadoop-1.0.0 (now /usr/local/sqoop if you moved it in step 3) and run the command

amit@ubuntu:~$ sudo mv ./* /usr/lib/sqoop

6. Open the ~/.bashrc file using

amit@ubuntu:~$ sudo gedit ~/.bashrc

7. Add the following lines

export SQOOP_HOME=/usr/lib/sqoop

export PATH=$PATH:$SQOOP_HOME/bin

8. Reload ~/.bashrc (or open a new terminal) and check the Sqoop installation and version by running sqoop version
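As a quick sanity check of steps 7 and 8, something like the following can be run in the current shell. It is only a sketch and assumes Sqoop was moved to /usr/lib/sqoop as in the steps above; if your install location differs, adjust SQOOP_HOME accordingly.

```shell
# Assumed install location, matching the SQOOP_HOME value used in step 7.
export SQOOP_HOME=/usr/lib/sqoop
export PATH=$PATH:$SQOOP_HOME/bin

# Report whether the sqoop launcher can be found on PATH.
if command -v sqoop >/dev/null 2>&1; then
    # Prints the installed Sqoop version.
    sqoop version
else
    echo "sqoop not found on PATH; check SQOOP_HOME=$SQOOP_HOME"
fi
```

If the second message appears, the most likely causes are that the files were not moved into /usr/lib/sqoop or that ~/.bashrc has not been reloaded yet.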

Once Sqoop is installed successfully, it’s time to import data from MySQL to HDFS.

How to import data from MySQL to HDFS:

1. First download the MySQL JDBC driver and copy it to /usr/lib/sqoop/lib

sudo cp /home/amit/Download/mysql-connector-java-5.1.26-bin.jar /usr/lib/sqoop/lib

2. Change directory to Sqoop:

cd /usr/lib/sqoop/

3. Start importing data from MySQL to HDFS:

sqoop import --connect jdbc:mysql://localhost/<databasename> --table <tablename> --username <username> --password <password>
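To make the placeholders concrete, here is a rough sketch of the same import with values filled in. The database name shop, table orders, user amit and target directory /user/amit/orders are all hypothetical and only for illustration. It also uses three standard Sqoop options that the command above does not: -P prompts for the password instead of putting it on the command line, --target-dir chooses the HDFS output directory, and -m 1 runs the import with a single map task.

```shell
# Hypothetical values for illustration; replace them with your own.
DB_NAME=shop
TABLE_NAME=orders
DB_USER=amit
TARGET_DIR=/user/amit/orders

# Build the import command as a string (values must not contain spaces).
IMPORT_CMD="sqoop import --connect jdbc:mysql://localhost/$DB_NAME --table $TABLE_NAME --username $DB_USER -P --target-dir $TARGET_DIR -m 1"

# Show the command that will be run.
echo "$IMPORT_CMD"

# Run the import only when Sqoop is actually installed.
if command -v sqoop >/dev/null 2>&1; then
    $IMPORT_CMD
    # List the files the import wrote to HDFS.
    hadoop fs -ls "$TARGET_DIR"
fi
```

Listing the target directory afterwards is a simple way to confirm that the table data actually landed in HDFS.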

Hope you'll enjoy it 🙂